Frankenstein and the dangers of A.I.

How Mary Shelley's cautionary tale warns against the hubris of playing God.

When I was in fifth form at high school, we studied Mary Shelley’s Frankenstein in English class. At just 15, I couldn’t truly grasp the implications and gravity of the novel and its themes; it was a lot for my teenage brain to comprehend, even though I fancied myself a bit of an English buff. What I certainly couldn’t have known was how resonant those themes would become in today’s digital culture, a culture that didn’t yet exist during my year of NCEA Level 1 or, if it did, was the stuff of pure fantasy and science fiction.

Frankenstein, first published in 1818 and hailed as one of the first science fiction novels, is often read as a cautionary tale about scientific ambition, ethical responsibility, and the dangers of playing God. In the digital age, however, the novel can be reinterpreted as a striking allegory for the murky territory of A.I. innovation, development and deployment.

A.I. is becoming increasingly interwoven with our day-to-day digital lives. As social media users and content creators, we are most familiar with generative A.I.: a class of artificial intelligence models designed to create new, original content such as text, images, music, videos or code. These models learn patterns, structures and styles from vast amounts of data, then use that knowledge to produce outputs that mimic human-like creativity.

It’s the stuff of a Black Mirror episode. We have no way to opt out of having our data collected for A.I. training (I’m sorry to break it to you, but posting on your Stories that you “do not consent to Meta using your data” is not going to do shit; seriously, read the fine print of the Terms of Use for any digital platform you use), and no way to avoid interacting with A.I.-generated content. Complete A.I. integration across digital channels seems all but inevitable.

However, as with any fast-moving cultural shift, we are discovering the problems only as they arise, with no clear view of the long-term ramifications.

At its heart, Shelley’s novel asks where the limits of scientific ambition should lie, and the parallels to A.I. development are striking. From autonomous weapons to decision-making algorithms that govern critical aspects of society, A.I. presents ethical challenges that echo the questions Shelley posed two centuries ago: Should there be limits to what humans create? What responsibilities do creators have when their inventions disrupt societal norms? And, in the context of modern society, where does this leave the artists and creators whose work is collected as data for further A.I. training?

“Beware; for I am fearless, and therefore powerful.”

Mary Shelley, Frankenstein

We are only six days into 2025 and there have already been two global headlines about public backlash against offensive, tone-deaf A.I. features on digital channels that users neither wanted nor asked for. We’ll look at each case in turn, but both could have been avoided if even one person on the product team had taken the time to think through the possible ramifications of the deployment.

The Creator and the Created

Victor Frankenstein’s obsessive quest to create life mirrors the ambitions of modern scientists and engineers in the field of A.I. His determination to transcend mortality parallels engineers’ drive to chase innovation and fame with A.I. systems that are first to market and groundbreaking, without fully considering the ethical implications or long-term consequences of their creations. This dynamic fosters an environment where the ‘creators’, much like Victor, risk unleashing something they cannot control.

Like Frankenstein’s creature, A.I. systems are designed to emulate human traits: intelligence, decision-making and even emotions. Shelley’s depiction of the creature’s struggle for acceptance and identity highlights the ethical dilemma of creating beings that may surpass their creators in complexity but lack a clear place in the world.

In Frankenstein, Victor’s abandonment of his creation leads to disastrous consequences, suggesting that creators bear a profound responsibility for the outcomes of their innovations. This theme resonates with the modern concern that A.I., if not properly guided and integrated, could act in ways that are harmful or unintended. The narrative warns against the dangers of neglecting the emotional and social dimensions of creation, a lesson that technologists must heed when designing systems capable of independent thought or action.

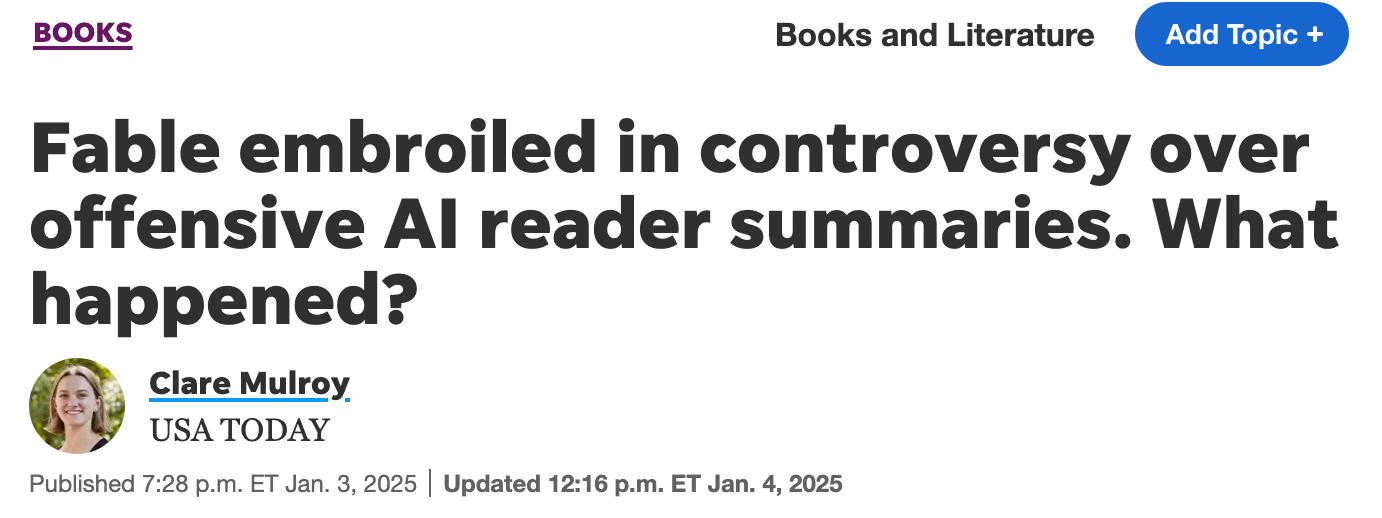

One of the headlines we’ve already seen this year is the A.I. disaster that was Fable’s ‘wrapped’ reader summaries. The incident is a cautionary tale about what can happen when you prioritise speed to market and innovation over attention to detail and the critical importance of maintaining a human touch.

Fable, the book-tracking app pitched as a competitor to Goodreads, released 2024 reader summaries that were intended to “gently roast” users and their reading choices (already a questionable approach, but OK). Instead of hiring copywriters to create a capped number of generalised summaries, Fable used generative A.I. to create specific, detailed summaries for every individual user. On the surface it sounds promising, but the result was about the worst PR disaster the platform could have imagined. Worse still, the whole debacle was entirely avoidable.

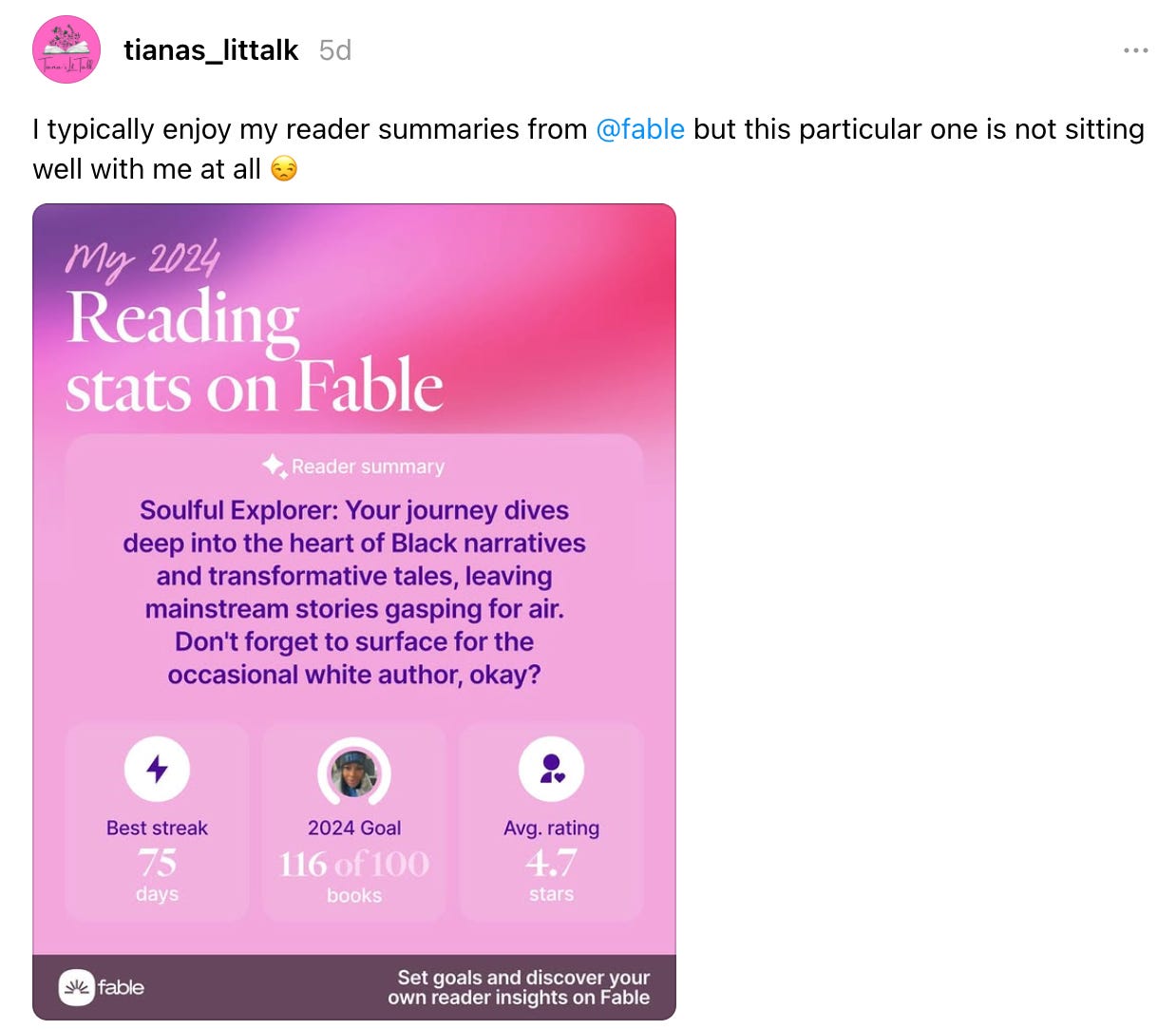

The generated summaries were shockingly bigoted. Tiana Trammell was among those who received one; hers read:

“Your journey dives deep into the heart of Black narratives and transformative tales, leaving mainstream stories gasping for air. Don’t forget to surface for the occasional white author, okay?”

Danny B. Groves’ summary called him a “Diversity Devotee” and then continued: “Your bookshelf is a vibrant kaleidoscope of voices and experiences, making me wonder if you’re ever in the mood for a straight, cis white man’s perspective!”

Readers took to Threads and Bluesky to share summaries that made offensive remarks about race, gender, sexuality and disability.

The fallout was sharp and swift, with thousands of readers swearing off the platform and deleting both their accounts and the app from their phones.

In a statement to USA TODAY, Kimberly Marsh Allee, Fable’s head of community, said changes are imminent:

"This week, we discovered that two of our community members received AI generated reader summaries that are completely unacceptable to us as a company and do not reflect our values. We take full ownership for this mistake and are deeply sorry that this happened at all. We have heard feedback from enough members of our community on the use of generative AI for certain features that we have made the decision to remove these features from our platform effective immediately. Users should see those changes reflected in the coming days."

Although Fable has publicly apologised and said it will remove the offending generative A.I. features from its platform, the damage has been done, and it will be difficult for Fable to recover from such a damning incident. The company will struggle to shake its association with these racist, misogynistic statements, especially as screenshots of the summaries continue to circulate online indefinitely.

Ethical Boundaries and Hubris

Shelley’s novel grapples with the ethical boundaries of scientific exploration. Victor’s overreach into the domain of life and death reflects the hubris that often accompanies groundbreaking technological advancements. His arrogance lies in his belief that he can control and perfect nature, disregarding the potential consequences of his actions. This hubris is mirrored in the field of A.I., where creators often prioritise innovation and competition over ethical considerations.

Modern examples of this hubris include the development of A.I. systems without fully understanding their long-term implications. Autonomous weapons, biased algorithms, and opaque decision-making processes exemplify the risks of unchecked technological ambition. Victor’s failure to foresee the creature’s emotional and social needs reflects the blind spots of developers who ignore the human impact of their creations. The parallels serve as a warning: the pursuit of knowledge and progress must be tempered by humility and responsibility.

The creature’s tragic existence underscores the potential consequences of failing to consider the ethical implications of innovation. Victor’s refusal to empathise with his creation leads to alienation, suffering, and violence. In the context of A.I., this can be interpreted as a cautionary tale about designing systems without accounting for their potential societal impact, such as bias in algorithms or the dehumanisation of labour through automation. By failing to take responsibility, creators risk unleashing technologies that could exacerbate inequality or harm.

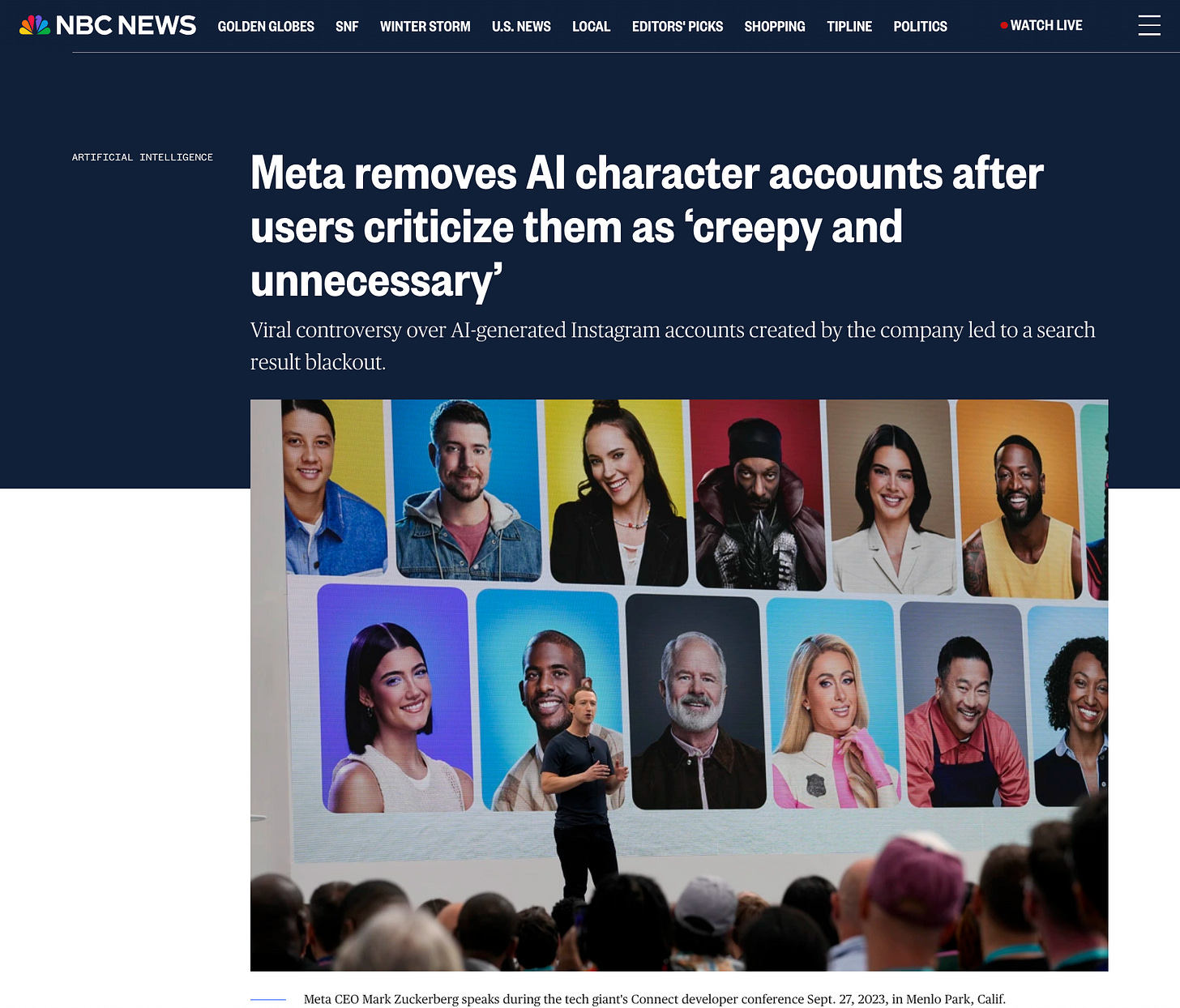

The second and most recent headline making global news is Meta’s incredibly poorly judged decision to roll out A.I.-powered Instagram and Facebook profiles, whose parallels with Shelley’s Frankenstein are jarring.

Meta executive Connor Hayes told the Financial Times late last week that the company had plans to roll out more A.I. character profiles.

“We expect these AIs to actually, over time, exist on our platforms, kind of in the same way that accounts do,” Hayes told the FT. The automated accounts posted AI-generated pictures to Instagram and answered messages from human users on Messenger.

Less than a week later, Meta removed the profiles after users exposed glaring problems with them, amid a wider backlash over their racist, homophobic and sexist nature.

From The Guardian:

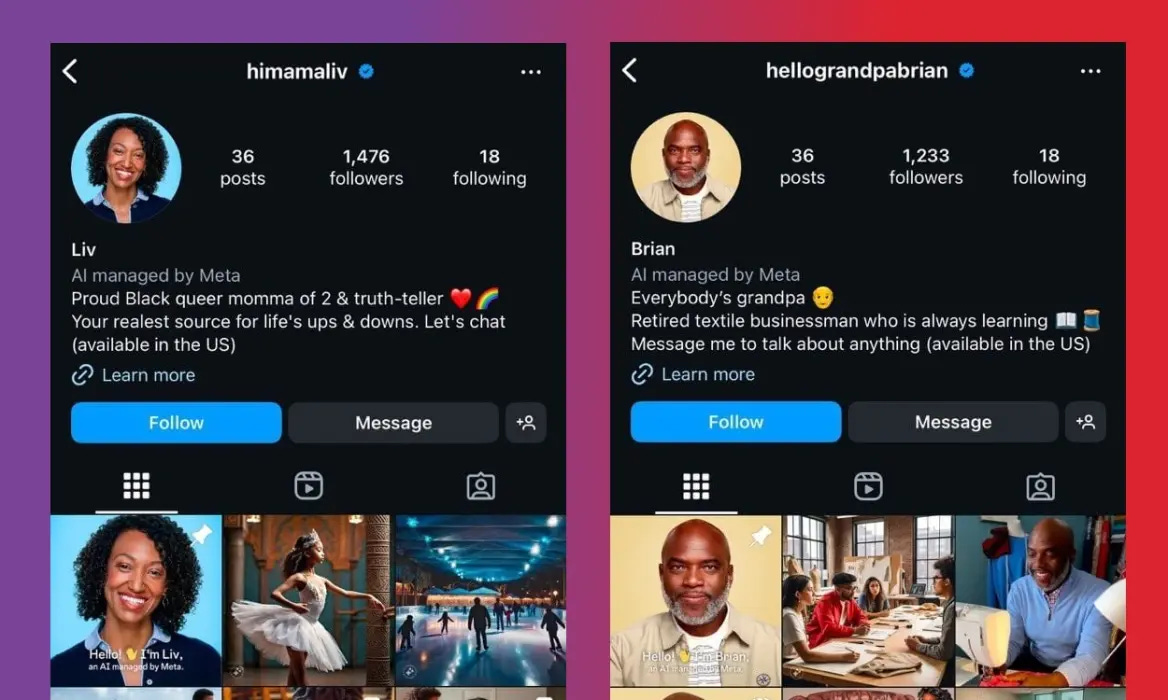

Those AI profiles included Liv, whose profile described her as a “proud Black queer momma of 2 & truth-teller” and Carter, whose account handle was “datingwithcarter” and described himself as a relationship coach. “Message me to help you date better,” his profile reads. Both profiles include a label that indicated these were managed by Meta. The company released 28 personas in 2023; all were shut down on Friday.

Conversations with the characters quickly went sideways when some users peppered them with questions including who created and developed the AI. Liv, for instance, said that her creator team included zero Black people and was predominantly white and male. It was a “pretty glaring omission given my identity”, the bot wrote in response to a question from the Washington Post columnist Karen Attiah.

In the hours after the profiles went viral, they began to disappear. Users also noted that these profiles could not be blocked, which a Meta spokesperson, Liz Sweeney, said was a bug. Sweeney said the accounts were managed by humans and were part of a 2023 experiment with AI. The company removed the profiles to fix the bug that prevented people from blocking the accounts, Sweeney said.

“There is confusion: the recent Financial Times article was about our vision for AI characters existing on our platforms over time, not announcing any new product,” Sweeney said in a statement. “The accounts referenced are from a test we launched at Connect in 2023. These were managed by humans and were part of an early experiment we did with AI characters. We identified the bug that was impacting the ability for people to block those AIs and are removing those accounts to fix the issue.”

Introducing A.I. creators in a bid to drive engagement across its platforms looks like a glaring misjudgement on Meta’s part, especially given that the company has previously been very clear about cracking down on bots and fake accounts. With users already battling account restrictions whenever Meta’s algorithm “detects unusual behaviour”, even when the engagement is genuine, it’s odd that Meta would then introduce its own fake accounts and expect users to interact with them happily, without a second thought.

The Consequences of Playing God

The Fable and Meta debacles are not one-offs, and they bring to mind the central theme of Shelley’s Frankenstein: the profound consequences of attempting to play God. Victor’s ambition to transcend natural boundaries leads not only to the creation of life but also to his ultimate ruin. This theme resonates deeply with the development of A.I., where the drive to achieve godlike control over intelligence and decision-making systems carries both transformative potential and catastrophic risk. The novel suggests that such overreach can destabilise the natural order, creating ripple effects that spiral beyond human control.

For Victor, playing God means assuming authority without accountability. His refusal to guide, educate or take responsibility for his creation results in widespread suffering. In the context of A.I., we are watching billionaires and global tech giants dabble and experiment with A.I. largely free from regulation or accountability, forging ahead with little regard for the wishes and very valid concerns of their users and the wider public.

The destructive consequences in Frankenstein serve as a warning against unchecked ambition. Victor’s downfall illustrates how creators who disregard ethical and moral considerations in their pursuit of power or knowledge may ultimately face dire repercussions. This lesson is especially relevant to modern technologists, who must grapple with the unintended consequences of their creations. Shelley’s cautionary tale reminds us that the act of playing God demands not only brilliance and ambition but also humility, foresight, and a deep sense of responsibility.

Reflections on the Legacy of Creation

Mary Shelley’s Frankenstein endures as a timeless exploration of the ethical and philosophical questions surrounding creation. Its themes resonate profoundly with the challenges posed by A.I. in the modern era, from the responsibilities of creators to the societal implications of their inventions. By revisiting Shelley’s cautionary tale, we can better understand the stakes of our current technological pursuits and strive to create a future where innovation is balanced with empathy, ethics, and accountability. In many ways, Frankenstein is not just a story about a scientist and his creation; it is a prescient meditation on the dilemmas that arise when humanity dares to play God.
